Hack the Senses projects were showcased in September 2017 at the Victoria and Albert Museum's Digital Design Weekend and at the New Scientist Live expo. We presented three installations, which received very positive and encouraging feedback.

The first installation was called 'Is Lavender Blue? A Chromatic Scentscape'. It was built around the idea that cultures throughout history have used colours to express emotions, power relations, danger and a host of other meanings. Odours and scents are also strong, yet sometimes subtle, triggers of attraction and repulsion that convey a lot of information. But what happens when we try to pair them? What is the colour of a scent? How do our memories, personal experiences and learned associations influence our choice? Or is there a colour ‘inherent’ to smells themselves, which we all perceive similarly? Drawing on research in crossmodal psychology, this mini-experiment invited the audience to explore the relationship between their sense of smell and their perception of colours.

The second installation was entitled 'Self-Constructor', and it investigated the idea of 'having' or 'being' a coherent self. What does it mean to be a self? As our lives are dispersed into ever more digital representations of who we are, is it ever possible to construct a coherent self-image? Or should we accept instead that it is bound to remain fragmented forever? This out-of-body VR experience gave visitors an opportunity to put all their pieces together.

For this piece we built a custom camera, which consisted of a stereoscopic unit and a third camera connected to a Raspberry Pi. The 3D camera sent its image to an Oculus virtual reality headset. Putting on the headset, a person could see themselves from the outside, from the camera's point of view. We had the person sit on a meditation pillow placed behind a tilted easel. On the easel we had eight custom-built blocks, each of which housed a microcomputer and an LCD screen. The blocks showed the same live video image as the VR headset, but the picture was fragmented and distributed over the eight blocks, so we ended up with an eight-piece puzzle showing a live image of the person wearing the VR headset. The task was to pick up the blocks and arrange them in such a way that the image was aligned correctly. In other words, we built a recursive puzzle in which the participant observed themselves from a third-person perspective while putting together a puzzle of themselves.
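The fragmentation step can be pictured as cutting each video frame into eight rectangles, one per block. The following is only a minimal sketch of that idea, not the code used in the installation: the 2 x 4 grid, the 640 x 480 frame size and the names `tile_boxes` and `is_solved` are all assumptions made for illustration.

```python
def tile_boxes(width, height, rows=2, cols=4):
    """Return one (left, top, right, bottom) crop box per block, row by row.

    The 2 x 4 layout is an assumption; the text only says there were
    eight blocks.
    """
    tile_w, tile_h = width // cols, height // rows
    boxes = []
    for r in range(rows):
        for c in range(cols):
            boxes.append((c * tile_w, r * tile_h,
                          (c + 1) * tile_w, (r + 1) * tile_h))
    return boxes


def is_solved(arrangement):
    """arrangement[i] is the index of the block placed at easel slot i.

    The picture is aligned when every block sits in its own slot.
    """
    return list(arrangement) == list(range(len(arrangement)))


# Hypothetical 640 x 480 frame: each block would display its own crop box.
boxes = tile_boxes(640, 480)
print(boxes[0])                               # crop box for the first block
print(is_solved([1, 0, 2, 3, 4, 5, 6, 7]))    # two blocks swapped
```

In a setup like this, each block would only need its own crop box and the shared video stream, so the blocks could be shuffled freely on the easel without any change to what each one displays.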

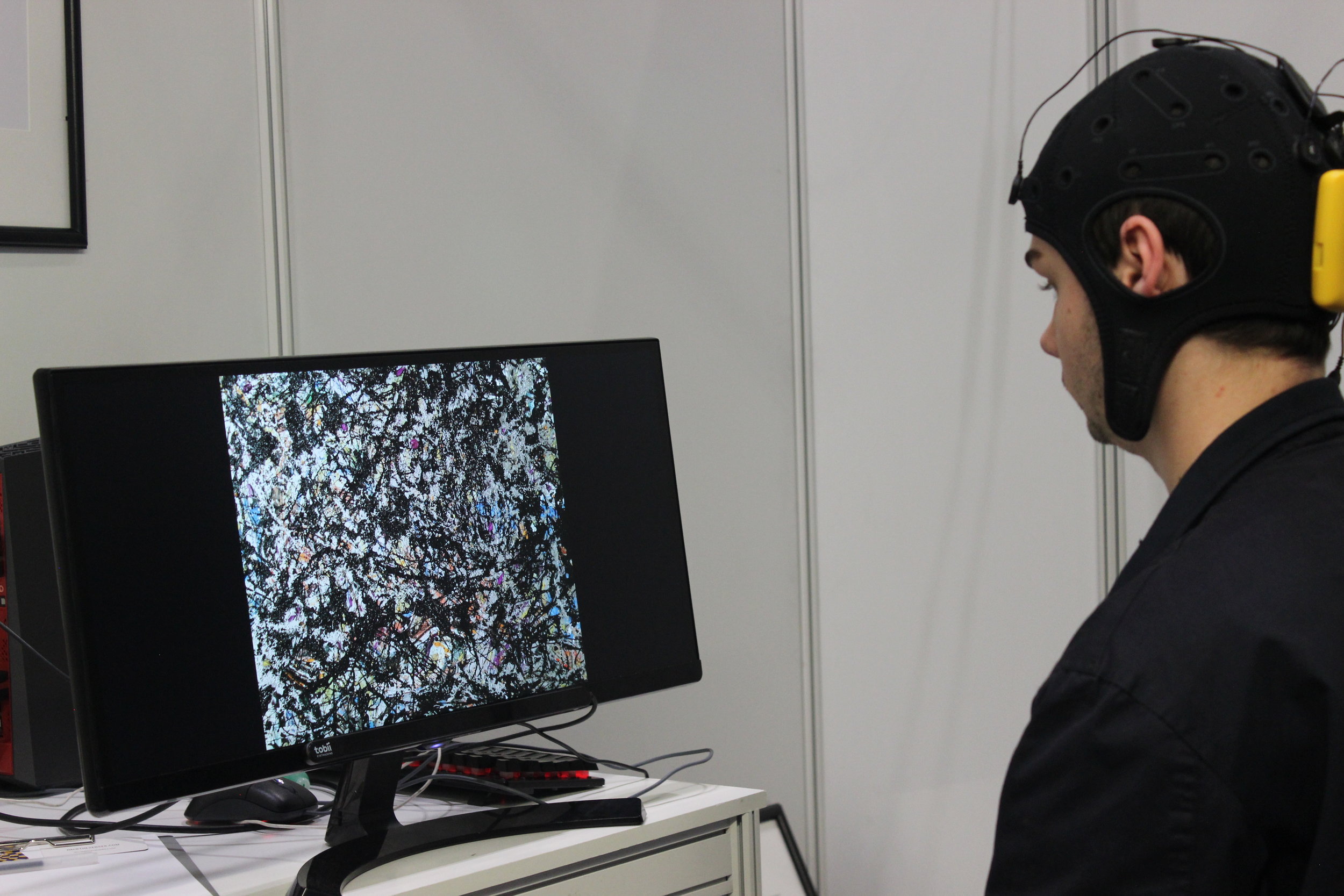

The third piece was called AAI, for Aesthetic Artificial Intelligence. The creation and appreciation of art is arguably one of the most human traits. Various computer programs can already generate outputs that may be interpreted as works of art, questioning the meaning and role of creativity. However, is the enjoyment of art something that a computer could also acquire? This piece is a work in progress. It seeks to teach an AI how humans look at and respond to works of visual art. The viewer’s eye movements and brain activity are recorded as they look at paintings. These are interpreted as the subjective effects that the pieces are having on the viewer. Based on these biometric signals, visual effects will be applied systematically to the images, resulting in unique (or perhaps uniform) versions of the originals. This data is used to train the AI to develop a model of human art appreciation. This installation was supported by Starlab Barcelona SLU, a data analysis service provider, and Neuroelectrics, a neurotechnology device provider.